Table of Contents

As easy as it is to extract data from the web, there is a lot of noise out there. Data could be duplicated, irrelevant, inaccurate, incorrect, corrupt, or just don’t fit your analysis.

How do you move from data quantity to data quality? Let’s start by saying this is an expensive process. It doesn’t mean you shouldn’t do it, but you should adjust your process given the resources you have.

There are simple steps that you can take to increase the data quality.

1. Remove empty and duplicates

If you somehow collected all the links from AdWeek.com for a competitors analysis, the first thing you need to do is to remove empty and duplicated values.

# Remove empty values

df['site_link'].replace('', np.nan, inplace=True)

df.dropna(subset=['site_link'], inplace=True)

# Drop duplicates, keep the first

df_no_dupes = df.drop_duplicates(subset="site_link", keep='first')2. Remove irrelevant values

What’s irrelevant for your analysis? Links like “https://www.adweek.com/1993/01/” don’t represent anything for your analysis. You want to keep links that mean something.

This is when you need to put your PM hat and come up with a simple algorithm to detect irrelevant values. For example, URLs that don’t have more than five words could be removed.

# Irrelevant observations

def is_irrelevant(link):

if len(link.split("-")) < 5:

return False

return True

df_no_dupes['is_irrelevant'] = df['site_link'].apply(is_irrelevant)

# remove irrelevant rows

df_no_irrelevant = df_no_dupes[df_no_dupes.is_irrelevant == False]3. Normalize your data

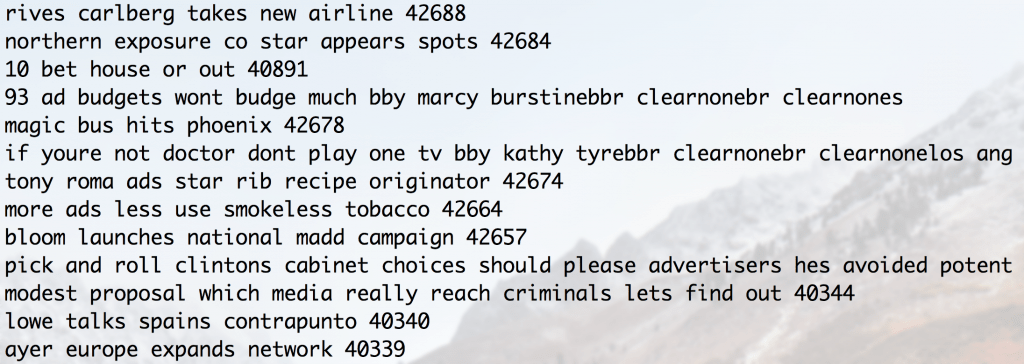

This is moving a bit forward from data cleaning. Just links won’t tell you much; you are interested in the words being used in the links. You can write a simple algorithm to extract the words from the links.

# Data normalization

def extract_words(link):

if link.endswith('/'):

link = link[:-1]

c = link.split('/')

w = c[len(c) - 1]

ws = w.replace('-', ' ')

return ws

# remove irrelevant rows

df_no_irrelevant['words'] = df_no_irrelevant['site_link'].apply(extract_words)Below is the resulting dataset after performing the data cleaning.

And here you can find the complete python example with all the steps.

As part of the data normalization, you can fill missing values too.

Now your data quality is high, and you are ready for a long analysis session with a nice cup of coffee.

- From SaaS to AI Agents - 05/27/25

- The AI Automation Engineer - 05/13/25

- Hire One Developer to Press One Key - 05/06/25